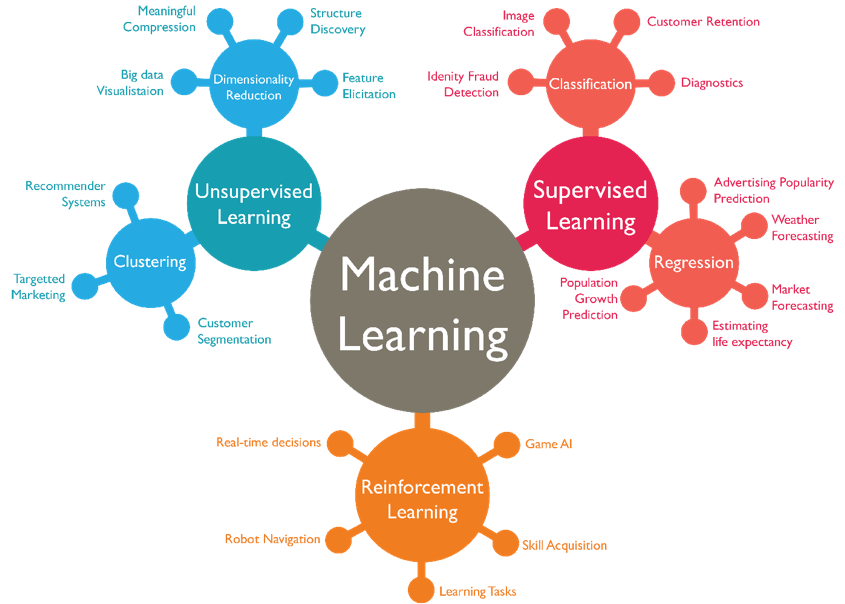

User Behavior Analytics + Network threat detection. The increasing focus on using security data analytics to extract insight and find or predict ‘bad’ has brought with it an influx of marketing promises of close-to-magical results. Among the offenders are those machine learning products suggesting data can be thrown at an algorithm and – BAM! – insight will appear.

To begin to understand Machine Learning and statistics is to first understand analytics and analysis.

This is how Webster’s Dictionary defines “Analytics”:

an·a·lyt·ics

/ˌanəˈlidiks/

noun

plural noun: analytics

- the systematic computational analysis of data or statistics.

“content analytics is relevant in many industries”

- information resulting from the systematic analysis of data or statistics.

“these analytics can help you decide if it’s time to deliver content in different ways”

…and this is how Wikipedia defines “Analytics”:

“Analytics is the discovery, interpretation, and communication of meaningful patterns in data. It also entails applying data patterns towards effective decision making. In other words, analytics can be understood as the connective tissue between data and effective decision making within an organization. Especially valuable in areas rich with recorded information, analytics relies on the simultaneous application of statistics, computer programming and operations research to quantify performance.”

Analytics vs. analysis:

The best explanation between analysis and analytics is also found on the Wikipedia page:

“Analysis is focused on understanding the past; what happened and why it happened. Analytics focuses on why it happened and what will happen next.”

What this translates to a data scientist or engineer is simplified into our work. The queries within our machines to produce findings, correlate and measure those findings to present results in the form of numbers – statistics – graphs – automation actions.

The hype around ‘ML’ in a lot of today’s infosec tooling typically obscures the less glamorous, but fundamental, aspect of data science: data collection and preparation (the latter being about 80 percent of a data scientist’s time). The hard cold truth is, machine learning and other algorithms need to be applied to appropriate, clean, well-understood data to even return valid results. Then, from there constantly re-tuned and validated at intervals that are organic.

The fact that there’s misinformation in security marketing is no surprise, but in an infosec context, a damaging effect is most probable. Infosec has far too many complex, disparate data sets that use automated analysis to pull them together and make sense of what they mean for different stakeholders (the CISO , SecOps, IT Ops, etc).

If machine learning products set expectations too high and then fall short, this reinforces theory of data skeptics– including the people who would manage the security budget. Get it wrong the first time, and you may not get them to buy into a data-driven solution again.

VALIDATING SECURITY ANALYTIC PRODUCT CLAIMS

If you’re buying an analytics/metrics tool that makes big claims about how it can turn your data into mined gold, here are some key pieces of information to obtain before you go ahead:

- What data needs to be ingested by the tool for you to get the promised results?

Some analysis products simply will only work with data that comes from systems tuned in a certain way. For example, if the platform you’re looking to buy uses web proxy data, what level of data transformation is required to get the fields it needs compared to what is active in your solution presently? Will infosec be able to request increased logging? What about the increased storage required?

Others require data from the whole network to give you the visibility you’re looking for. Otherwise, you may be making decisions based only on the alerts you can see, not necessarily on the alerts that matter.

You should educate yourself on information you’ll be making decisions on and how it differs from what is stated in marketing materials – (if there is data or data fields not easily accessed).

Also consider the hoops you will need to jump through to gain access to each source required. Is the data owned by infosec or a third-party, such as Infrastructure or an external vendor? Will you be able to get access to it, in what format – i.e. has the data been transformed in any way? This is important , as this can affect the analysis that is at all possible.

How quickly can you ingest data, and how soon can you ingest it after it’s been created? Could you pull it from the cloud via an API (e.g. threat data), or will the network team have to move logs across your infrastructure (e.g. Active Directory event logs)? A lag between data being generated then being ingested may affect your ability to take timely actions.

- For how long after installation must this data be collected in order for the tool to achieve the promised level of accuracy/effectiveness, and at what point before this will its output be useable?

Machine learning models need to be trained. For example, for a threat detection tool to find anomalous behavior in your network, the model must first be supplied with data which covers all the usual network behaviors occurring over time. More data means more power to detect changes that are truly out of the ordinary. The vendor should advise you of any caveats, related to the training of the model, that you should take into account when using the tool to solve a particular problem at a particular point in time.

- What volume of data will the tool output and what else do you need to turn this into ‘results’ (the presentation layer) that are then actionable by your team?

If your new threat detection solution produces 1,500 alerts daily that then need investigation by SecOps analysts, the people to do that need to come from somewhere. Furthermore, has your vendor indicated how many of those alerts are likely to be “true”?

Machine learning models will always return “false positives,” so be aware of the precision (number of true positives/ (number of true positives + number of false positives)) relative to the chosen algorithm(s) and how much tuning is required to achieve this. If precision is low, your team will be wading through a lot of noise. If it’s high, but the tool requires analysts to do lots of tuning to achieve this, be aware of the dependency you’ll have on skills.

It may not be an issue if you need to ingest seven data sources that are high effort to get access to and move across the network. It may not be an issue if you then need to wait for nine months for an ML model to be trained before you know if your investment delivers a strong ratio of cost to value. But it will be an issue if you don’t know about this when you sign up because these are the factors that will impact the way you approach your investment of time, effort and money – and the way you set internal expectations for what the result of your efforts will be.

The takeaway:

- Be aware of what your needs are, what data types you want to garner information from and specifically what data types and fields.

- Don’t believe all the hype about how ML can apply to “All the Things” and is the magic easy button to find “malicious” and eliminate all the super expensive skilled labor.

- As Albert Einstein states : “Everything should be made as simple as possible, but not simpler.” Which is an homage to Occoms razor. Use ML for data sets as it would implicitly require clean and easy to manage field data – though complex and labor intensive in the requirement of finding anomalies.

- Know how quickly a clean training set can be created – given your data specifics.

- Know how quickly a trained model can be had.

- Know your variables of acceptable precision, what TTPs must be put in place to work those- (human in the loop), and how much data it takes to get there.

- Know what your accuracy requirement for a model re-training interval.

- Does the ML effort meet or beat that of manually mining the data with a SIEM and highly skilled data scientists?

References

SciForce (2019). Anomaly Detection — Another Challenge for Artificial Intelligence. Retrieved from: https://medium.com/sciforce/anomaly-detection-another-challenge-for-artificial-intelligence-c69d414b14db

Powell, L. (2016). Avoid the Infosec Machine Learning Trap: The Data Is More Important than the Algorithm. Retrieved from: https://www.tripwire.com/state-of-security/security-data-protection/avoid-infosec-machine-learning-trap-data-important-algorithm/

Wikipedia. Analytics. Retrieved from: https://en.wikipedia.org/wiki/Analytics